SPBench workloads

Workload Classes

SPBench provides different classes of input workloads for each application. In this version, every application provides five classes: test, small, medium, large, and huge.

The input workloads provided by SPBench must be downloaded from a remote repository before first use. To do this, run the download-inputs command:

./spbench download-inputs

This command downloads all input classes for all applications. Downloading the larger workloads may take several minutes, depending on your internet connection.

You can also download the workload classes for a single application:

./spbench download-inputs -app <application_name>

You can also select a single workload class:

./spbench download-inputs -app <application_name> -class <workload_class>

For more information, run:

./spbench download-inputs -h
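If you only need a few classes (for example, to try an application quickly before fetching the large and huge inputs), the per-class command above can be looped. This is a minimal sketch: the helper name download_classes and the SPBENCH variable (defaulting to ./spbench) are assumptions added for illustration, and the application name in the usage line is a hypothetical placeholder.

```shell
#!/usr/bin/env bash
# Sketch: download a chosen subset of workload classes for one application.
# download_classes and SPBENCH are hypothetical; SPBENCH defaults to the
# ./spbench launcher used in the commands above.
download_classes() {
  local app="$1"
  shift                          # remaining arguments are class names
  local class
  for class in "$@"; do
    # Progress messages go to stderr so stdout only carries command output.
    echo "Downloading class '${class}' for '${app}'..." >&2
    "${SPBENCH:-./spbench}" download-inputs -app "${app}" -class "${class}" || return 1
  done
}
```

For example, `download_classes lane_detection test small` would fetch only the test and small classes; replace `lane_detection` with the name of the application you want.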

Lane Detection

The input workload for the Lane Detection application is a single video file recorded from a truck driving on a road. The video is an MP4 file (H.264 codec) at 30 frames per second, available in low quality (LQ, 640×360) and high quality (HQ, 1280×720). The same video is used for all workload classes, trimmed to different lengths.

Test class: 3-second video (LQ = 0.57 MB and HQ = 2 MB)

Small class: 15-second video (LQ = 5.5 MB and HQ = 14.8 MB)

Medium class: 30-second video (LQ = 12 MB and HQ = 32.1 MB)

Large class: 60-second video (LQ = 25.4 MB and HQ = 66.1 MB)

Huge class: 120-second video (LQ = 50 MB and HQ = 130 MB)

The default resolution is 360p. To use the 720p videos, add the ‘-HQ’ suffix to the class name (e.g. large-HQ).

Lane Detection input illustration
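When scripting runs over both resolutions, the naming rule above can be captured in a small helper. This is a sketch: the function name lane_class is hypothetical, and it only builds the class-name string from the classes and the ‘-HQ’ suffix described above; it does not call SPBench.

```shell
#!/usr/bin/env bash
# Sketch: map a Lane Detection class and resolution to its class name.
# lane_class is a hypothetical helper; it only prints the name.
lane_class() {
  local class="$1" resolution="${2:-360p}"   # 360p is the default resolution
  case "$class" in
    test|small|medium|large|huge) ;;
    *) echo "unknown class: $class" >&2; return 1 ;;
  esac
  case "$resolution" in
    360p) printf '%s\n' "$class" ;;        # LQ videos use the plain class name
    720p) printf '%s\n' "${class}-HQ" ;;   # HQ videos use the -HQ suffix
    *) echo "unsupported resolution: $resolution" >&2; return 1 ;;
  esac
}
```

For instance, `lane_class large 720p` prints `large-HQ`, while `lane_class large` prints `large`.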

Bzip2

The input workloads for Bzip2 are dump files from the Wikipedia database:

Test class: enwiki-20211120-pages-articles-multistream-index9.txt (16.8 MB)

Small class: enwiki-20220601-pages-articles-multistream15.xml (129.7 MB)

Medium class: enwiki-20211120-all-titles-in-ns0 (349.1 MB)

Large class: enwiki-20211120-pages-articles-multistream9 (2.1 GB)

Huge class: enwiki-20211120-pages-articles-multistream9-2x.xml (4.2 GB)

To run the benchmarks in decompress mode, add the ‘_d’ suffix to the class name (e.g. large_d) to use the compressed versions of these files.

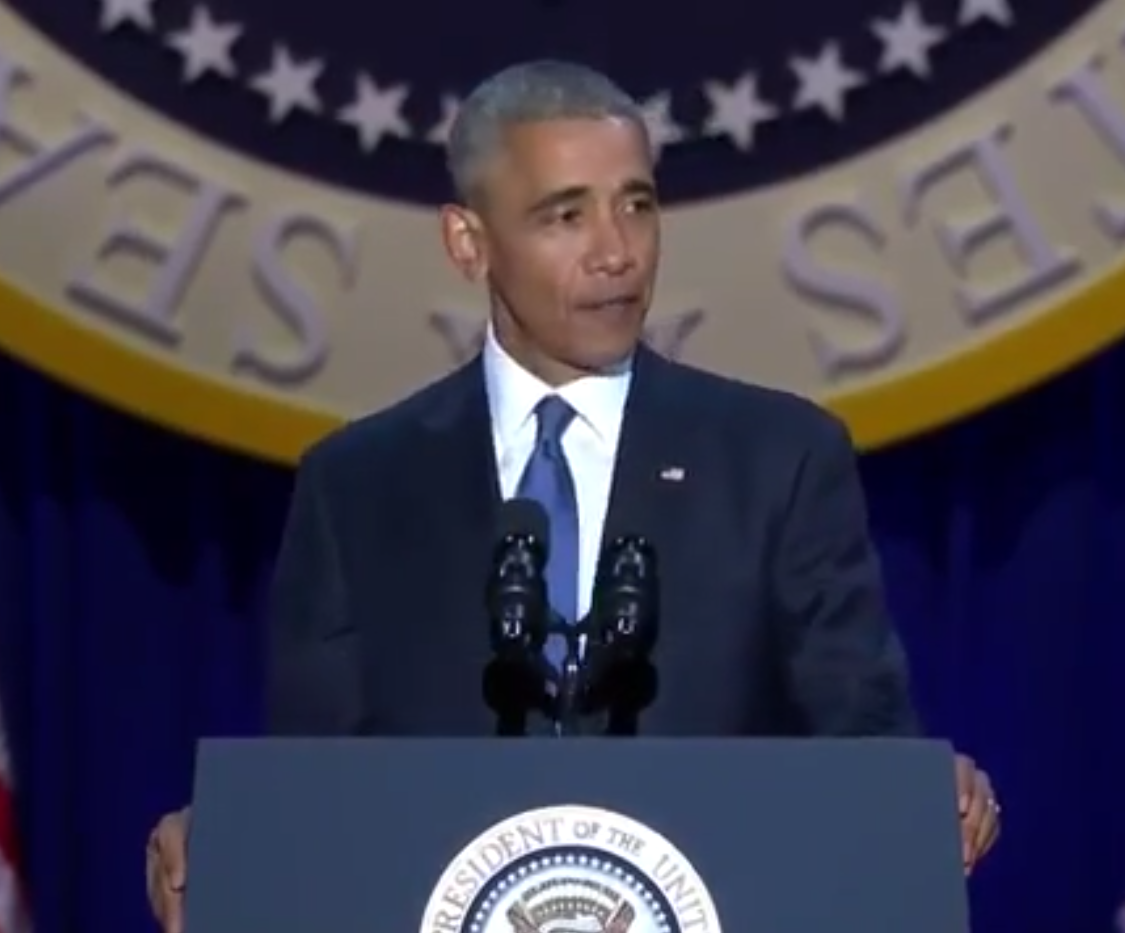

Person Recognition

The Person Recognition input consists of a set of pictures of former US president Barack Obama’s face and a video recorded during a talk. The video shows his face and, at some points, the faces of other people in the audience. The pictures are used to train the application, which then tries to recognize the former president’s face in the video (360p resolution).

All workload classes use the same video, but at different lengths, as described below:

Test class: 0.3-second video

Small class: 1-second video

Medium class: 3-second video

Large class: 15-second video

Huge class: 30-second video

Person Recognition input illustration

Ferret

For Ferret, SPBench uses the original workloads available on the PARSEC website.

In SPBench, the PARSEC ‘native’ workload is available under the ‘huge’ alias.

Using External Inputs

To run an application with an input that is not provided by SPBench, you must first register that input with the new-input command:

E.g. ./spbench -new-input -id <my_input_name> -app <spbench_app> -input-string <"my_input_string">
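If the input string contains spaces, it must reach the command as a single argument. A minimal sketch that wraps the registration command shown above and keeps the string quoted; the helper name register_input, the SPBENCH variable (defaulting to ./spbench), and the example values are hypothetical, and what the input string must contain depends on the application.

```shell
#!/usr/bin/env bash
# Sketch: register an external input with the command form shown above.
# register_input and SPBENCH are hypothetical; SPBENCH defaults to ./spbench.
register_input() {
  local id="$1" app="$2" input_string="$3"
  # Quoting "$input_string" passes it as one argument even if it has spaces.
  "${SPBENCH:-./spbench}" -new-input -id "$id" -app "$app" -input-string "$input_string"
}
```

Usage: `register_input my_input_name spbench_app "my input string"`, with the placeholders replaced by your own values.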

Adding Result Checking

SPBench applications can check the result at the end of the execution by comparing the md5 hash of the benchmark output with a precomputed md5 hash stored in the input register. To enable this check for an external input you have registered, we recommend first running the sequential version of the benchmark with that input, so that the reference result file is more reliable.

To add the md5 hash, use the -md5 argument:

E.g. ./spbench -new-input ... -md5 <expected_md5_hash_to_check>

Warning

Use this resulting output file, not the original input file, to compute the md5 hash you add to the input register.
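The workflow above (hash the output of a sequential run, then register the input with that hash) can be sketched in shell. This assumes GNU coreutils `md5sum` is available (on macOS, `md5 -q <file>` prints the bare hash instead) and that you know where the sequential run wrote its output; the helper names, the SPBENCH variable (defaulting to ./spbench), and the output path are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: register an external input together with its expected md5 hash.
# Assumes GNU md5sum; helper names and SPBENCH are hypothetical.

# Print the md5 hash of a file (reading stdin avoids md5sum's filename escaping).
expected_md5() {
  md5sum < "$1" | cut -d ' ' -f 1
}

# Hash the output of a sequential run and register the input with that hash.
register_with_md5() {
  local id="$1" app="$2" input_string="$3" output_file="$4"
  if [ ! -f "$output_file" ]; then
    echo "output file not found: $output_file" >&2
    return 1
  fi
  local hash
  hash="$(expected_md5 "$output_file")"
  "${SPBENCH:-./spbench}" -new-input -id "$id" -app "$app" \
    -input-string "$input_string" -md5 "$hash"
}
```

Usage: `register_with_md5 my_input_name spbench_app "my_input_string" path/to/sequential_output`, where the last argument is the output file produced by the sequential run, not the original input.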

Workload Characterization

Forthcoming…